Mobile Network Tests

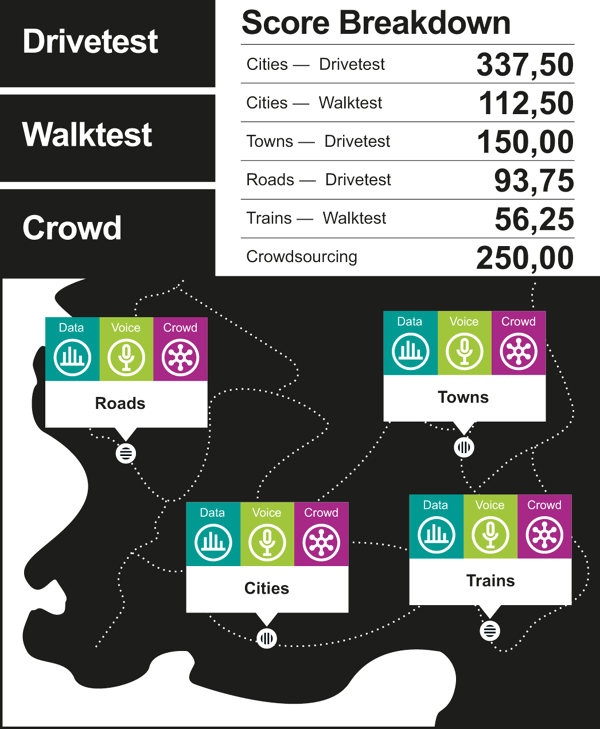

Our benchmarks are the result of a sophisticated methodology, based on drive tests and walk tests as well as on a comprehensive crowdsourcing analysis.

Logistics

connect’s network test partner umlaut, part of Accenture, sent four measurement vehicles through the country, each equipped with twelve smartphones. For each network operator, a Samsung Galaxy S21+ took the voice measurements, and another S21+ established the connections for the new test case “conversational app” (see section “Data connections” below). For the actual data tests, we used a Samsung Galaxy S22+. For all measurements, the smartphones were set to “5G preferred” – so wherever supported by the network, the data tests took place via 5G.

In addition to the drive tests, two walk test teams carried out measurements on foot in each country, in zones with heavy public traffic such as railway station concourses, airport terminals, cafés, public transport and museums. The walk test programme also included journeys on long-distance railway lines. For the walk tests, the same three smartphone types were used per network operator for the same measurements as in the drive tests. The walk test teams transported the smartphones in backpacks or trolleys equipped with powerful batteries. The firmware of the test smartphones corresponded to the original network operator version in each case.

The drive and walk tests took place between 8 am and 10 pm. For the drive tests, two vehicles were in the same city, but not in the same place, so that one car would not distort the measurements of the other. On the connecting roads, two vehicles each drove the same routes, but one after the other with some time and distance between them. To select the test routes, umlaut created four different route suggestions for each country, from which connect blindly selected one.

Voice connections

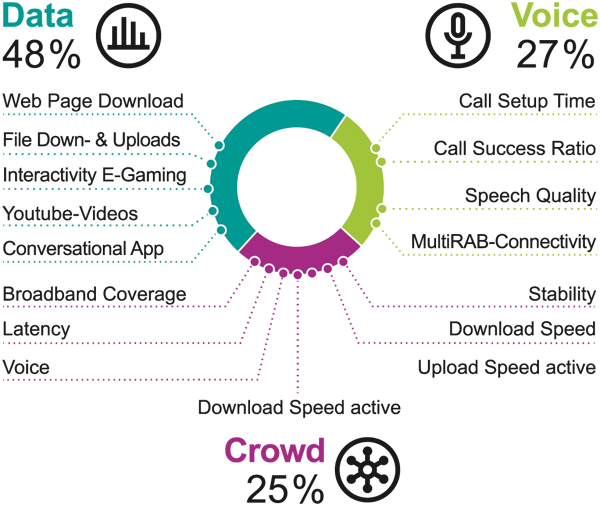

Voice connections account for 27 percent of the overall result. For this purpose, mobile telephone calls were established from vehicle to vehicle (“mobile-to-mobile”), and their success rates, call set-up times and voice quality were measured. For the voice tests, the smartphones of the walk test teams called a stationary remote station (also a smartphone).

To ensure realistic conditions, data traffic was handled simultaneously in the background. We also recorded MultiRAB connectivity: the use of several “radio access bearers” provides data connections in the background of the voice calls. The transmission quality was evaluated with the POLQA wideband method suitable for HD voice. “VoLTE preferred” was configured on all phones, so phones connected via 5G fell back to LTE for telephony.

Data connections

The data measurements account for 48 percent of the total result. Several popular live pages (dynamic) and the ETSI reference page known as the Kepler page (static) were retrieved to assess web page retrieval. In addition, 10 MB and 5 MB files were downloaded and uploaded, respectively, in order to determine the performance for smaller data transfers. We also determined the data rate within a 7-second period when uploading and downloading large files. Since YouTube dynamically adjusts the playback resolution to the available bandwidth, the video rating takes into account the average image resolution (line count) of the videos, the time to reach full resolution, the success rate and the time until playback starts.

To challenge network performance, the smartphones additionally retrieved videos in 4K (2160p). A typical over-the-top (OTT) voice connection is represented by the test case “conversational app”. For this, we set up a voice channel via the SIP and STUN protocols using the OPUS codec and determined the success rate and voice quality. In addition, our measurements simulated a highly interactive UDP multiplayer session to determine the latency of the connection and any packet losses. This was done in our newly added test point “Interactivity of eGaming”.

Crowdsourcing

Crowdsourcing results account for 25 percent of the overall rating. They show which network performance actually arrives at the user, although the end devices and tariffs used also influence these results.
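The three category weights stated in this document (27 percent voice, 48 percent data, 25 percent crowdsourcing) add up to 100 percent. As a minimal sketch of how such weights could combine into one result, assume each category delivers a degree of fulfilment between 0 and 1; the function name and the input values are hypothetical, and the actual scoring scheme may differ in detail.

```python
# Category weights as stated in the text.
WEIGHTS = {"voice": 0.27, "data": 0.48, "crowdsourcing": 0.25}


def overall_score(fulfilment):
    """Weighted sum of per-category fulfilment values (each 0.0 to 1.0).

    Assumption: categories are combined linearly. `fulfilment` must
    contain exactly the three categories named in WEIGHTS.
    """
    if set(fulfilment) != set(WEIGHTS):
        raise ValueError("expected exactly the categories: %s" % sorted(WEIGHTS))
    return sum(WEIGHTS[name] * fulfilment[name] for name in WEIGHTS)


# Hypothetical operator: 90 % in voice, 80 % in data, 70 % in crowdsourcing.
example = overall_score({"voice": 0.9, "data": 0.8, "crowdsourcing": 0.7})
```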

To obtain the data basis for these analyses, thousands of popular apps recorded the parameters described below in the background – provided the user agreed to the completely anonymous data collection. The measured values were recorded in 15-minute intervals and transmitted to the umlaut servers once a day. The reports contain only a few bytes, so they hardly burden the user’s data volume.

Broadband Coverage

In order to determine the broadband coverage reach, umlaut laid a grid of 2 x 2 km tiles (“Evaluation Areas”, in short EAs) over the test area. A minimum number of users and measured values had to be available for each EA. For the evaluation, umlaut awarded one point per EA if the network under consideration offered 3G coverage. Three points were awarded if 4G or 5G was available in the EA. The score achieved in this way was divided by the achievable number of points (three points per EA in the “common footprint” – the area of the respective country covered by all tested providers).
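The point scheme for coverage reach can be sketched as follows. This is an illustration only: the function name and the input format (one best-available technology label per EA in the common footprint, or `None` where neither 3G nor better was seen) are assumptions, and the minimum-sample filter mentioned above is taken to have been applied beforehand.

```python
# Points per EA as described in the text: 1 for 3G, 3 for 4G or 5G.
POINTS = {"3G": 1, "4G": 3, "5G": 3}
MAX_POINTS_PER_EA = 3


def coverage_reach(best_technology_per_ea):
    """Return achieved points divided by achievable points.

    `best_technology_per_ea` is a hypothetical input: one entry per EA in
    the common footprint, e.g. "3G", "4G", "5G", or None for no broadband.
    """
    eas = list(best_technology_per_ea)
    if not eas:
        raise ValueError("at least one evaluation area is required")
    achieved = sum(POINTS.get(tech, 0) for tech in eas)
    return achieved / (MAX_POINTS_PER_EA * len(eas))


# Four EAs: 3 + 3 + 1 + 0 = 7 of 12 achievable points.
reach = coverage_reach(["5G", "4G", "3G", None])
```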

We also looked at the coverage quality. This KPI is the share of EAs in the common footprint in which a user had 4G or 5G reception.

The time on broadband in turn tells us how often a user had 4G or 5G reception in the period under consideration – regardless of the EAs in which the samples were recorded. For this purpose, umlaut divides the number of samples that show 4G/5G coverage by the total number of samples. Important: The percentage values determined for all three parameters reflect the respective degree of fulfilment – and not a percentage of 4G/5G mobile coverage in relation to area or population.
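Coverage quality and time on broadband are both simple ratios, one counted over EAs and the other over individual samples. A minimal sketch, with hypothetical function names and input lists of technology labels:

```python
BROADBAND = {"4G", "5G"}


def coverage_quality(best_technology_per_ea):
    """Share of EAs in the common footprint with 4G or 5G reception."""
    eas = list(best_technology_per_ea)
    if not eas:
        raise ValueError("at least one evaluation area is required")
    return sum(1 for tech in eas if tech in BROADBAND) / len(eas)


def time_on_broadband(sample_technologies):
    """Share of all samples showing 4G or 5G, regardless of their EA."""
    samples = list(sample_technologies)
    if not samples:
        raise ValueError("at least one sample is required")
    return sum(1 for tech in samples if tech in BROADBAND) / len(samples)


quality = coverage_quality(["5G", "4G", "3G", None])           # 2 of 4 EAs
on_broadband = time_on_broadband(["4G", "4G", "3G", "5G", "2G"])  # 3 of 5 samples
```

The two values can differ noticeably for the same network, because an EA counts once no matter how many samples were recorded in it.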

Data rates and Latencies

The passive determination of download data rates and latencies was carried out independently of the EAs and focused on the experience of each user. Samples that were captured via Wi-Fi or while flight mode was activated, for example, were filtered out by umlaut before the analysis.

To take into account that many mobile phone tariffs throttle the data rate, umlaut defined three application-related speed classes: Basic internet requires a minimum of 2 Mbit/s, HD video requires 5 Mbit/s and UHD video requires 20 Mbit/s. For a sample to be valid, a minimum amount of data must have flowed in a 15-minute period. Similarly, the latency of the data packets is assigned to an application-related class: round-trip times of up to 100 ms are sufficient for OTT voice services, while less than 50 ms qualifies a sample for gaming. In this way, the evaluation also does justice to the fact that the passively observed data rates depend on the applications used in each case.
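The thresholds above can be expressed as a small classifier. This is a sketch under assumptions: the function names and class labels are hypothetical, and it assumes that a sample meeting a higher threshold also counts for the lower classes, which the text does not state explicitly. The validity check on the minimum amount of data is left out because its value is not given.

```python
# Minimum download rates in Mbit/s, as given in the text.
SPEED_CLASSES = [("basic internet", 2.0), ("HD video", 5.0), ("UHD video", 20.0)]


def speed_classes(mbit_per_s):
    """Return every speed class whose minimum rate the sample reaches."""
    return [name for name, minimum in SPEED_CLASSES if mbit_per_s >= minimum]


def latency_classes(round_trip_ms):
    """Return the latency classes a round-trip time qualifies for.

    Up to 100 ms (inclusive) is sufficient for OTT voice; strictly less
    than 50 ms qualifies for gaming, following the wording of the text.
    """
    classes = []
    if round_trip_ms <= 100:
        classes.append("OTT voice")
    if round_trip_ms < 50:
        classes.append("gaming")
    return classes
```

For example, `speed_classes(6.0)` yields the basic internet and HD video classes, while `latency_classes(50)` yields only OTT voice because the gaming threshold is strict.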

In addition to the passive measurements, umlaut also conducted active measurements of upload and download data rates once a month. These determine the amount of data that could be transferred in 3.5 seconds. For the resulting values, we consider the average data rate, the P10 value (90% of the values are higher than this threshold, a good approximation of the typical minimum speed) and the P90 value (10% of the values are higher than this threshold), which gives a view of the peak values.
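To illustrate the three statistics, the following sketch converts a transferred data volume into a data rate and then computes the average, P10 and P90 with Python's standard library. The function names are hypothetical, and the percentile interpolation used here (the default method of `statistics.quantiles`) is an assumption; the method actually used by umlaut is not stated.

```python
import statistics


def data_rate_mbit_per_s(bytes_transferred, window_s=3.5):
    """Convert the data volume moved within the test window to Mbit/s."""
    return bytes_transferred * 8 / window_s / 1_000_000


def summarize_rates(rates_mbit_per_s):
    """Return the mean, P10 and P90 of a list of measured data rates.

    statistics.quantiles(..., n=10) returns the nine decile cut points;
    the first is P10 and the last is P90.
    """
    deciles = statistics.quantiles(rates_mbit_per_s, n=10)
    return {
        "mean": statistics.mean(rates_mbit_per_s),
        "p10": deciles[0],
        "p90": deciles[-1],
    }


# Hypothetical measurements: rates of 1, 2, ..., 99 Mbit/s.
summary = summarize_rates(list(range(1, 100)))
```

A low P10 relative to the mean indicates that a noticeable share of measurements fell well below the average speed, even if peak values were high.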

Stability

Based on the determined data rates and additional browsing and connection tests, umlaut also examined when a broadband connection could be used at all. The averaged and weighted results define the percentage of transaction success.

HD Voice

The parameter HD voice shows the proportion of the user’s voice connections that were established in HD quality – and thus via VoLTE (Voice over LTE). A prerequisite was that the smartphone supported this standard.

Reliability

umlaut divided all measured values into basic requirements (“Qualifier KPIs”) and values related to peak performance (“Differentiator KPIs”). The presentation of reliability takes into account only the “Qualifier KPIs” from the voice and data categories and the basic KPIs from crowdsourcing.